If you have been wondering whether Jupyter Notebook is the right tool for your data work, you are in the right place. This interactive coding environment has quietly become a backbone of data science work around the world, and after spending considerable time testing its capabilities, I can honestly say it surprised me in several ways.

What started as a simple idea to combine code with documentation has evolved into something much bigger. Scientists, researchers, and developers now rely on Jupyter Notebook to turn raw data into meaningful insights. The ability to write code, see results immediately, and document everything in one place feels like having a complete data laboratory right in your browser.

What Is Jupyter Notebook?

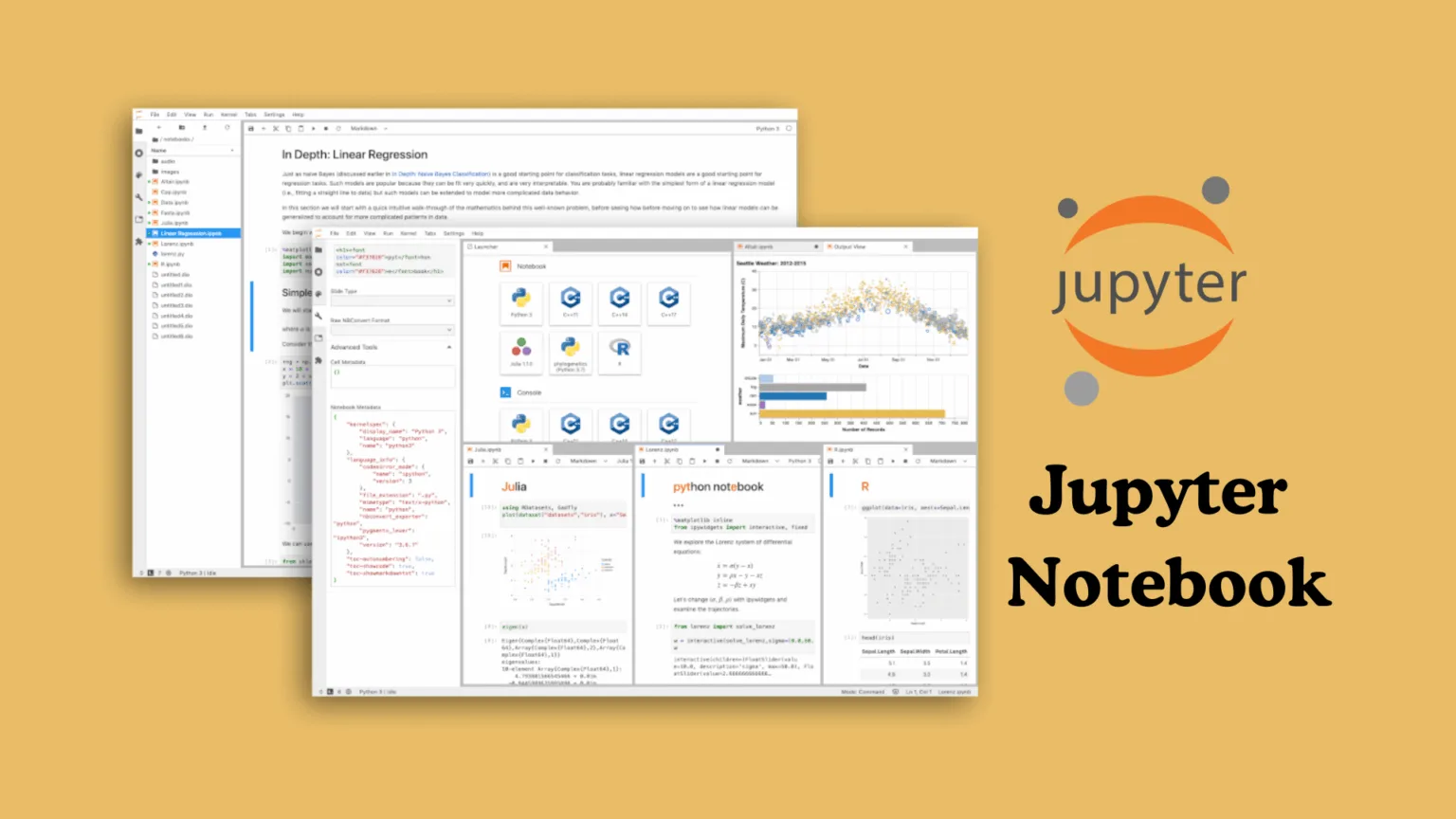

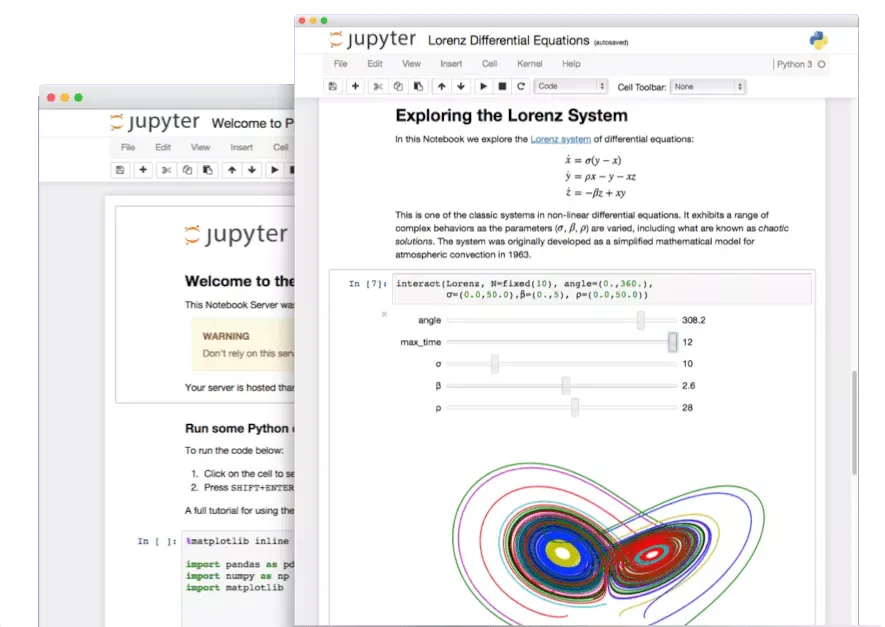

Jupyter Notebook is an open-source web application that lets you create documents containing live code, equations, visualizations, and explanatory text, all in one place. The name is a nod to the three languages it originally supported (Julia, Python, and R), though kernels now exist for over 40 different programming languages.

Think of it as a digital notebook where each page can run actual code. You write a few lines, hit execute, and the results appear right below your code. This creates a natural flow between thinking, coding, and analyzing that traditional programming environments struggle to match. The architecture follows a client-server model: your browser acts as the interface, while a backend kernel handles all the computation.

What makes Jupyter Notebook special is how it breaks coding down into manageable chunks called cells. Each cell can hold either code or formatted text written in Markdown. This means you can explain what you are doing, write the code to do it, and show the results, all in sequence. For someone learning data analysis or testing new ideas, this approach feels incredibly natural.

Why It Matters in Data Science

The data science community has embraced Jupyter Notebook because it solves real problems that come up daily. When you are exploring a new dataset, you need to try things quickly, see what works, and adjust your approach. With a traditional script, you typically write everything, run the entire file, and hope it works. With Jupyter Notebook, you can test one piece at a time, keeping what works and fixing what does not.

Companies like Netflix, Bloomberg, and PayPal use Jupyter notebooks to explore their massive datasets. The interactive nature means data scientists can spot patterns, catch errors early, and iterate quickly. In my testing, I noticed how much smoother the workflow became compared to writing traditional Python scripts. Loading a dataset took seconds, and I could immediately start visualizing trends without switching between multiple programs.

The real breakthrough, however, is reproducibility. Science requires that results can be verified and replicated. Jupyter notebooks store not just your code but also your outputs and explanations. When you share a notebook with a colleague, they see exactly what you did and can rerun your analysis to verify the findings. This transparency builds trust and accelerates collaboration across teams.

Jupyter Notebook at a Glance

Project Jupyter, the organization behind Jupyter Notebook, emerged from the IPython project in 2014. The team wanted to create language-agnostic tools for interactive computing, and the result changed how millions of people work with data. The software runs on Windows, macOS, and Linux, making it accessible regardless of your operating system.

Installing Jupyter Notebook is straightforward, especially if you use Anaconda, which bundles Python and Jupyter together. Otherwise, a simple `pip install jupyter` command gets you started. Once it is installed, you launch it from your terminal with `jupyter notebook`, and your default browser opens to show a file dashboard. From there, creating a new notebook takes just one click.

The interface looks clean and uncluttered. At the top you have a menu bar and a toolbar with common actions like saving, running cells, and changing cell types. The main area shows your cells stacked vertically. Code cells have brackets with execution numbers, while Markdown cells render formatted text. This simplicity means beginners can start working within minutes without feeling overwhelmed.

JupyterLab: The Next Generation

While Jupyter Notebook remains popular, JupyterLab represents the next evolution of the platform. Launched in 2018, JupyterLab provides a more powerful, flexible environment while maintaining full compatibility with Jupyter Notebook files. The key difference is the interface: JupyterLab uses a multi-document layout that lets you work with several notebooks, files, and terminals side by side.

In my experience, JupyterLab feels like upgrading from a simple text editor to a full development environment. You get an advanced file browser, drag-and-drop functionality, and the ability to arrange your workspace however you want. Need to compare two datasets? Open both notebooks next to each other. Want to write documentation while testing code? Put a Markdown file beside your notebook. This flexibility becomes essential as projects grow more complex.

JupyterLab also offers features, some built in and others available as extensions, such as a visual debugger, table of contents navigation, and real-time collaboration tools. The dark theme option is a welcome addition for long coding sessions. Despite these advanced features, JupyterLab remains approachable. If you know Jupyter Notebook, you will feel right at home in JupyterLab within minutes.

Core Offerings That Stand Out

The cell-based execution model is what sets Jupyter Notebook apart from traditional coding. Each cell can be executed on its own, so you can run, modify, and rerun cells in any order. This means you can experiment freely without rerunning your entire workflow. During testing, I found myself adjusting parameters in one cell, rerunning the plotting cell, and immediately seeing how those changes affected my visualizations.

With the default IPython kernel, code cells support not just Python but also magic commands that add extra functionality. Commands like `%timeit` measure execution speed, while `%matplotlib inline` displays plots directly in your notebook. These shortcuts save time and reduce the need for external tools. The kernel maintains state across all cells, remembering variables, functions, and imported libraries, so you build up your analysis naturally, step by step.
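To make the timing idea concrete outside a notebook, here is a minimal sketch using Python's standard `timeit` module, which works on the same loops-and-repeats principle as `%timeit`. The function `sum_of_squares` and the loop counts are my own illustrative choices, not anything prescribed by Jupyter.

```python
import timeit


def sum_of_squares(n):
    """Return the sum of the squares of 0..n-1 (a hypothetical workload)."""
    return sum(i * i for i in range(n))


# Run the call 1,000 times per measurement and take 5 measurements.
timings = timeit.repeat(lambda: sum_of_squares(1_000), number=1_000, repeat=5)

# The minimum is usually the least noisy estimate of the per-call cost.
best_per_call = min(timings) / 1_000
print(f"best of 5: {best_per_call * 1e6:.2f} microseconds per call")
```

Inside a notebook, `%timeit sum_of_squares(1_000)` gives you a comparable measurement in a single line and picks the loop count for you.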

Markdown cells transform Jupyter notebooks from mere code containers into complete documents. You can add headers, lists, links, images, and even mathematical equations using LaTeX syntax. This combination of code and narrative makes notebooks well suited to tutorials, research reports, and educational materials. When someone reads your notebook, they get both the what and the why behind your analysis.

Technology & Performance

Under the hood, Jupyter Notebook uses a kernel to execute your code. When you run a cell, your browser sends the code to the kernel, which processes it and returns the results. Because the computation happens in a separate process, the interface stays responsive even during complex calculations. The kernel persists between cell executions, which is why variables remain available throughout your session.
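The persistence described above can be imitated in a few lines of plain Python. This is only a toy model under my own assumptions: a real kernel is a separate process that talks to the browser over a messaging protocol, whereas here a single dictionary stands in for the kernel's namespace, and `run_cell` is a hypothetical helper.

```python
# A toy stand-in for a kernel: one namespace shared by every "cell".
namespace = {}

# Each string plays the role of one code cell.
cells = [
    "import math",
    "radius = 2.0",
    "area = math.pi * radius ** 2",
]


def run_cell(source, ns):
    """Execute one cell's source in the shared namespace."""
    exec(source, ns)


for source in cells:
    run_cell(source, namespace)

# The third cell could use `math` and `radius` because earlier cells
# left them behind in the shared namespace.
print(round(namespace["area"], 2))  # 12.57
```

Restarting the kernel corresponds to throwing away `namespace` and starting with an empty dictionary, which is why every variable disappears.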

Python is by far the most common language used with Jupyter, but the architecture supports dozens of kernels for languages like R, Julia, Scala, and even JavaScript. Each notebook runs against one kernel at a time, and you choose the kernel that best fits the task. For data scientists, this flexibility means they can use R notebooks for statistical modeling and Python notebooks for machine learning, all within the same environment.

Performance-wise, Jupyter Notebook handles typical data science tasks smoothly. Loading datasets with thousands of rows, processing them, and generating visualizations happens quickly on modern hardware. However, Jupyter does have limitations with very large datasets or long-running computations. Since everything the kernel loads stays in memory, notebooks can become slow when working with gigabytes of data. And because a kernel runs one cell at a time, one slow cell blocks every cell queued behind it.
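One common way to soften the memory limit is to stream data instead of loading it all at once. The sketch below is an illustration under my own assumptions: the tiny in-memory CSV stands in for a large file on disk, and `total_by_key` is a hypothetical helper, not part of Jupyter.

```python
import csv
import io

# Hypothetical data standing in for a large CSV file on disk.
raw = io.StringIO("city,sales\nOslo,10\nLima,20\nOslo,5\n")


def total_by_key(rows, key, value):
    """Accumulate a per-key total while reading one row at a time."""
    totals = {}
    for row in rows:
        totals[row[key]] = totals.get(row[key], 0.0) + float(row[value])
    return totals


# csv.DictReader yields rows lazily, so only one row is held at a time.
totals = total_by_key(csv.DictReader(raw), key="city", value="sales")
print(totals)  # {'Oslo': 15.0, 'Lima': 20.0}
```

With a real file you would pass an open file handle instead of `raw`; memory use then depends on the number of distinct keys rather than the number of rows.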

Ease of Use & Accessibility

One of Jupyter Notebook’s greatest strengths is its accessibility. The web-based interface means there is no complex IDE to learn and no confusing project structure: just open your browser and start coding. Beginners appreciate how immediate the feedback feels: write code, press Shift+Enter, and see results instantly. This quick iteration cycle makes learning to code much less intimidating.

Keyboard shortcuts accelerate your workflow once you learn them. The Escape key switches to command mode, letting you navigate between cells without a mouse. In command mode, pressing A adds a cell above the current one, while B adds one below. These shortcuts become second nature quickly and noticeably improve productivity. The toolbar provides visual alternatives for people who prefer clicking buttons.

Accessibility extends beyond the interface. Jupyter notebooks work on any device with a browser, provided it can reach a running Jupyter server. You can start a notebook on your desktop, continue on your laptop, and even view it (though not edit it very effectively) on a tablet. Cloud services like Google Colab let you run Jupyter notebooks without installing anything locally, which removes barriers for students and researchers with limited computing resources.

How Much Does Jupyter Notebook Cost?

Here is where Jupyter Notebook really shines: it is completely free. Because it is an open-source project under a BSD 3-Clause license, you can download, install, modify, and use Jupyter without paying a single dollar. There are no premium tiers, no feature restrictions, and no hidden costs. This free access has democratized data science education, allowing anyone with a computer to learn and practice.

The only costs come from the infrastructure needed to run Jupyter. If you install it on your personal computer, there are no additional expenses; your existing hardware is all you need. For more demanding workloads, you might choose cloud hosting, which does incur costs, but those are standard compute charges, not Jupyter licensing fees.

Google Colab offers free access to hosted Jupyter notebooks with limited GPU resources, and its Colab Pro plan adds longer sessions for around $10 per month. Other cloud platforms like AWS SageMaker and Azure Notebooks charge based on the compute instances you use. These services provide powerful hardware and scalability, but Jupyter itself remains free regardless of where you run it. For individual learners, students, and small projects, the local installation costs nothing.

Is It Worth the Price?

Given that Jupyter Notebook is free, the value proposition becomes obvious: you get a professional-grade data science tool without spending anything. The return on investment is immediate, especially for students and self-taught programmers. Even if you later decide to use paid cloud services, the cost is based on computing power, not the software itself.

The real investment is time rather than money. Learning Jupyter Notebook’s workflow, keyboard shortcuts, and best practices takes effort, but that effort pays dividends quickly. After a few hours of practice, you will navigate notebooks efficiently, create visualizations with ease, and document your work professionally. This skill transfers directly to professional environments, where Jupyter is often the standard tool.

For organizations, Jupyter Notebook represents significant cost savings compared to proprietary alternatives. Teams can standardize on a free platform that integrates well with existing Python and R workflows. The vibrant open-source community means bugs get fixed, new features arrive regularly, and help is available through forums and documentation.

Strengths of Jupyter Notebook

The interactive execution model makes Jupyter Notebook exceptional for exploration and learning. You write a line of code, see what it does, and immediately adjust based on the results. This rapid feedback loop accelerates learning and reduces debugging time. During my testing, I could identify and fix errors in seconds rather than minutes because I saw exactly which cell caused the problem.

Visualization integration works beautifully. Libraries like Matplotlib, Seaborn, and Plotly render charts directly in your notebook, creating a seamless analysis experience. You can generate a graph, adjust the code to improve it, and regenerate it without leaving the notebook. For presentations and reports, this means your visualizations live alongside the code that created them, providing complete transparency.

The ability to mix code, documentation, and results in one document is transformative for collaboration and knowledge sharing. Teams can pass notebooks between members with confidence that the context and reasoning are preserved. Educational institutions use Jupyter notebooks to teach programming because students see working examples with explanations right next to the code. The official Jupyter website offers excellent resources for getting started.

Portability and reproducibility make Jupyter notebooks ideal for scientific research. When researchers publish findings, they can include the notebook that generated their results. Others can download it, rerun the analysis, and verify the conclusions independently. This openness strengthens the scientific process and builds trust in data-driven findings.

Limitations to Keep in Mind

Version control presents challenges with Jupyter notebooks. Since notebooks are stored as JSON files with lots of metadata, comparing changes in Git becomes messy. You end up seeing differences in output data and cell metadata rather than just the code changes you care about. Tools exist to help with this, but they add complexity to your workflow.
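To show why diffs get noisy, here is a rough sketch of what a notebook looks like on disk and how outputs can be cleared before committing. The notebook dictionary is hand-written for illustration and only approximates the nbformat 4 layout, and `strip_outputs` is a hypothetical helper; dedicated tools such as nbstripout and nbdime handle this far more thoroughly.

```python
import json

# A hand-written minimal notebook in the nbformat 4 layout (illustrative only).
notebook = {
    "nbformat": 4,
    "nbformat_minor": 4,
    "metadata": {},
    "cells": [
        {"cell_type": "markdown", "metadata": {}, "source": ["# Demo"]},
        {
            "cell_type": "code",
            "execution_count": 3,
            "metadata": {},
            "source": ["print(1 + 1)"],
            "outputs": [
                {"output_type": "stream", "name": "stdout", "text": ["2\n"]}
            ],
        },
    ],
}


def strip_outputs(nb):
    """Return a copy of the notebook with outputs and execution counts cleared."""
    cleaned = json.loads(json.dumps(nb))  # cheap deep copy of JSON-like data
    for cell in cleaned["cells"]:
        if cell["cell_type"] == "code":
            cell["outputs"] = []
            cell["execution_count"] = None
    return cleaned


cleaned = strip_outputs(notebook)
print(json.dumps(cleaned["cells"][1], indent=1))
```

After stripping, rerunning a cell no longer changes the file unless the source itself changed, which keeps Git diffs focused on code.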

The non-linear execution model that makes Jupyter great for exploration can also lead to confusion. If you run cells out of order, or run the same cell twice, you can create inconsistent state where variables have unexpected values. This becomes especially problematic when sharing notebooks, because someone else might run cells in a different sequence and get different results. Maintaining execution-order discipline requires conscious effort.
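The hidden-state problem is easy to reproduce with a toy model. In this sketch, which rests on my own simplifications, three strings stand in for notebook cells and `run_in_order` is a hypothetical helper that executes them in a fresh namespace in whatever order you give it.

```python
# Three "cells"; cell B modifies a variable that cell A defined.
cells = {
    "A": "price = 100",
    "B": "price = price * 2",
    "C": "result = price + 1",
}


def run_in_order(order):
    """Run the named cells in the given order in a fresh namespace."""
    ns = {}
    for name in order:
        exec(cells[name], ns)
    return ns["result"]


top_to_bottom = run_in_order(["A", "B", "C"])      # 201
reran_cell_b = run_in_order(["A", "B", "B", "C"])  # 401
print(top_to_bottom, reran_cell_b)
```

The code on screen is identical in both cases, yet the result differs. Restarting the kernel and running all cells from the top before sharing is a simple guard against this.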

Scalability issues emerge with large projects or datasets. Jupyter notebooks encourage putting everything in one file, which can lead to massive notebooks that are slow to load and difficult to navigate. The single-kernel architecture means that working with truly big data or running long computations blocks your entire notebook. For production systems, traditional Python scripts with proper modularity often work better.

The lack of built-in IDE features frustrates some developers. Code completion is basic compared to full IDEs. Classic Jupyter Notebook has no advanced refactoring, no sophisticated debugging tools, and only limited linting. JupyterLab addresses some of these gaps but still falls short of dedicated development environments like PyCharm or VS Code for large-scale software engineering.

Is Jupyter Notebook Reliable for Data Work?

For data analysis, prototyping, and educational purposes, Jupyter Notebook is extremely reliable. The technology has matured over the years, with millions of users worldwide. In my experience bugs are rare, and when they occur, the active community tends to fix them quickly. The simple architecture means fewer things can go wrong compared to complex IDEs.

That said, reliability depends on how you use Jupyter. For exploratory analysis and model prototyping, it excels. The ability to save checkpoints means you can roll back if something goes wrong. Autosave helps prevent lost work, though you should still manually save important notebooks regularly. I experienced no crashes or data loss during extensive testing.

For production systems, Jupyter Notebook is not the right choice. Production code needs robust error handling, automated testing, and proper modularity, none of which Jupyter encourages. Teams typically prototype in Jupyter notebooks, then refactor successful code into proper Python modules for deployment. This workflow combines Jupyter’s exploratory strengths with production engineering best practices.
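As a rough illustration of that refactoring step, here is how a one-off notebook calculation might look once it is moved into a module. The function `normalize` and its test are hypothetical examples of my own, meant only to show the shape of the result: a named function, explicit error handling, and a test that can run automatically.

```python
def normalize(values):
    """Scale numbers linearly so the smallest maps to 0.0 and the largest to 1.0."""
    values = list(values)
    if not values:
        raise ValueError("values must not be empty")
    low, high = min(values), max(values)
    if low == high:
        raise ValueError("values must contain at least two distinct numbers")
    return [(v - low) / (high - low) for v in values]


def test_normalize():
    """Check the normal case and the empty-input error path."""
    assert normalize([2, 4, 6]) == [0.0, 0.5, 1.0]
    try:
        normalize([])
    except ValueError:
        pass
    else:
        raise AssertionError("expected ValueError for empty input")


test_normalize()
```

In a notebook, the same logic often lives as a few loose lines operating on whatever variable happens to be in memory; pulling it into a function makes the inputs explicit and the behavior checkable.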

The kernel can occasionally become unresponsive, especially with infinite loops or memory-intensive operations. When this happens, you can interrupt the running cell or, if that fails, restart the kernel, which clears all variables. This can be frustrating, but it is also a safeguard against hanging processes. Writing efficient code and deleting large variables when you are finished with them helps prevent kernel issues.

Customer Support & Community

Jupyter benefits from an exceptionally strong open-source community. The official documentation is comprehensive, covering installation, basic usage, and advanced features. Real Python, DataCamp, and other educational sites offer detailed tutorials that walk you through common tasks step by step. These resources make learning Jupyter accessible even for complete beginners.

When you encounter problems, Stack Overflow has thousands of answered questions about Jupyter Notebook. The Jupyter Discourse forum provides a place to ask questions, discuss features, and share tips. GitHub issues let you report bugs or request features directly to the development team. Response times vary, but the community is generally helpful and supportive.

For organizations that need guaranteed support, commercial options exist. Companies like Anaconda offer enterprise support subscriptions. Cloud platforms that host Jupyter often include support as part of their service. However, most users find that community resources answer their questions without the need for paid support.

The ecosystem around Jupyter is rich with extensions, plugins, and complementary tools. Extensions add functionality like table of contents generation, code formatting, and collaboration features. Services like nbviewer let you share notebooks as static web pages. This thriving ecosystem means Jupyter continues to evolve, with new capabilities appearing regularly.

What Else Can You Consider?

Google Colab provides a Jupyter notebook environment in the cloud with free GPU access. It integrates tightly with Google Drive, making file management simple. The main limitations are session duration and resource availability, especially on the free tier. For students and individuals without powerful local hardware, Colab removes barriers to entry.

VS Code with the Jupyter extension combines notebook functionality with a powerful IDE. You get advanced code completion, debugging, and Git integration alongside the ability to run notebook cells. This hybrid approach appeals to developers who want Jupyter’s interactivity without sacrificing IDE features. The learning curve is steeper, but the productivity gains can be substantial.

JetBrains DataSpell and PyCharm Professional also support Jupyter notebooks with excellent IDE integration. These paid tools provide professional features like intelligent code completion, advanced debugging, and database tools. For teams already using JetBrains products, the integration is seamless, though the cost may be prohibitive for individuals.

Deepnote and Observable offer collaborative notebook environments designed for teams. They emphasize real-time collaboration, version control, and easier sharing compared to traditional Jupyter. These platforms work well for data teams, but their team plans require subscription fees and tie you to their ecosystem.

How Jupyter Stacks Up Against Competitors

Compared to traditional IDEs, Jupyter Notebook trades advanced features for simplicity and interactivity. You give up sophisticated refactoring and debugging but gain immediate visual feedback and easier exploration. For data science work, this tradeoff usually makes sense: scientists and analysts care more about understanding data than about writing perfect production code.

Against cloud notebook services like Google Colab, Jupyter Notebook offers more control and privacy. Running locally means your data never leaves your computer and you are not dependent on internet connectivity. However, you miss out on the free GPU access and collaborative features that cloud services provide. The choice depends on your priorities and resources.

JupyterLab itself represents the best of both worlds for many users. It maintains Jupyter Notebook’s core strengths while adding IDE-like features and better workspace management. Unless you specifically need the simplicity of classic Jupyter Notebook, JupyterLab is generally the better choice moving forward.

Should You Choose Jupyter Notebook for Your Project?

If you are doing data analysis, experimenting with machine learning, or learning to code, then yes, Jupyter Notebook is an excellent choice. The interactive workflow makes exploration natural, and the visual feedback helps you understand your data faster. For education, research, and prototyping, Jupyter Notebook has become the industry standard for good reason.

For building production applications, Jupyter Notebook is not the right tool. Once you have a working prototype, you should refactor your code into proper Python modules with tests and error handling. Jupyter excels at the creative, exploratory phase, but production systems need more structure and reliability.

The free price point means there is essentially no risk in trying Jupyter Notebook. Install it, spend an afternoon working through tutorials, and see if the workflow suits you. Most people find that Jupyter transforms how they approach data problems, making the entire process more interactive, transparent, and enjoyable.

Final Thoughts

After extensive testing, I can confidently say Jupyter Notebook deserves its reputation as a cornerstone tool for data science. The combination of interactive execution, rich visualization, and integrated documentation creates a workflow that feels natural and productive. Seeing results immediately as you write code changes how you think about programming, making it more exploratory and less intimidating.

The limitations around version control and scalability are real, but they do not diminish Jupyter’s value for its intended use cases. Understanding these tradeoffs lets you use Jupyter where it excels: exploration, learning, and communication. For these purposes, Jupyter Notebook and JupyterLab remain hard to beat.

What impressed me most was how Jupyter democratizes data science. Its free, open-source nature, combined with a gentle learning curve, means anyone can start analyzing data, creating visualizations, and building models. This accessibility has opened doors for countless people who might otherwise never have explored programming or data analysis.