The Context7 MCP server is changing how developers interact with AI coding assistants, and the shift is substantial. AI coding tools like Cursor, Claude or GitHub Copilot often generate code based on training data that can be months or even years old. This creates a frustrating cycle: you ask for help, get confident-looking code and then spend hours debugging because the API changed three versions ago.

Context7 solves this by pulling up-to-date documentation from official sources on demand. When you type “use context7” in your prompt, the server fetches current, version-specific documentation and injects it straight into your AI’s context window. It is like giving your assistant a direct line to the source instead of relying on memory.

The impact is immediate, and it goes beyond getting newer information. Context7 uses a proprietary ranking algorithm called c7score that filters out noise and delivers only the most relevant code snippets. This means your AI gets concise, working examples instead of walls of text that waste tokens and confuse the model.
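To make the “concise examples instead of walls of text” idea concrete, here is a minimal Python sketch of fitting ranked snippets into a fixed token budget. It is not c7score, whose internals this article does not describe: the `Snippet` type, the scores, the `pack_snippets` helper and the word-count token estimate are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Snippet:
    title: str
    code: str
    score: float  # hypothetical relevance score; higher is better


def estimate_tokens(text: str) -> int:
    # Crude proxy: whitespace-separated words. Real tokenizers count differently.
    return len(text.split())


def pack_snippets(snippets: list[Snippet], budget: int) -> list[Snippet]:
    """Greedily keep the highest-scoring snippets that fit within `budget` tokens."""
    chosen: list[Snippet] = []
    used = 0
    for snip in sorted(snippets, key=lambda s: s.score, reverse=True):
        cost = estimate_tokens(snip.code)
        if used + cost <= budget:  # skip anything that would overflow the budget
            chosen.append(snip)
            used += cost
    return chosen


if __name__ == "__main__":
    docs = [
        Snippet("install", "npm install next", 0.9),
        Snippet("full changelog", "word " * 500, 0.2),
        Snippet("route handler", "export async function GET() { return Response.json({}) }", 0.8),
    ]
    for snip in pack_snippets(docs, budget=50):
        print(snip.title)
```

The greedy loop skips an oversized snippet and keeps going, so a short, relevant example further down the ranking can still fit.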

How Context7 MCP Works Behind the Scenes

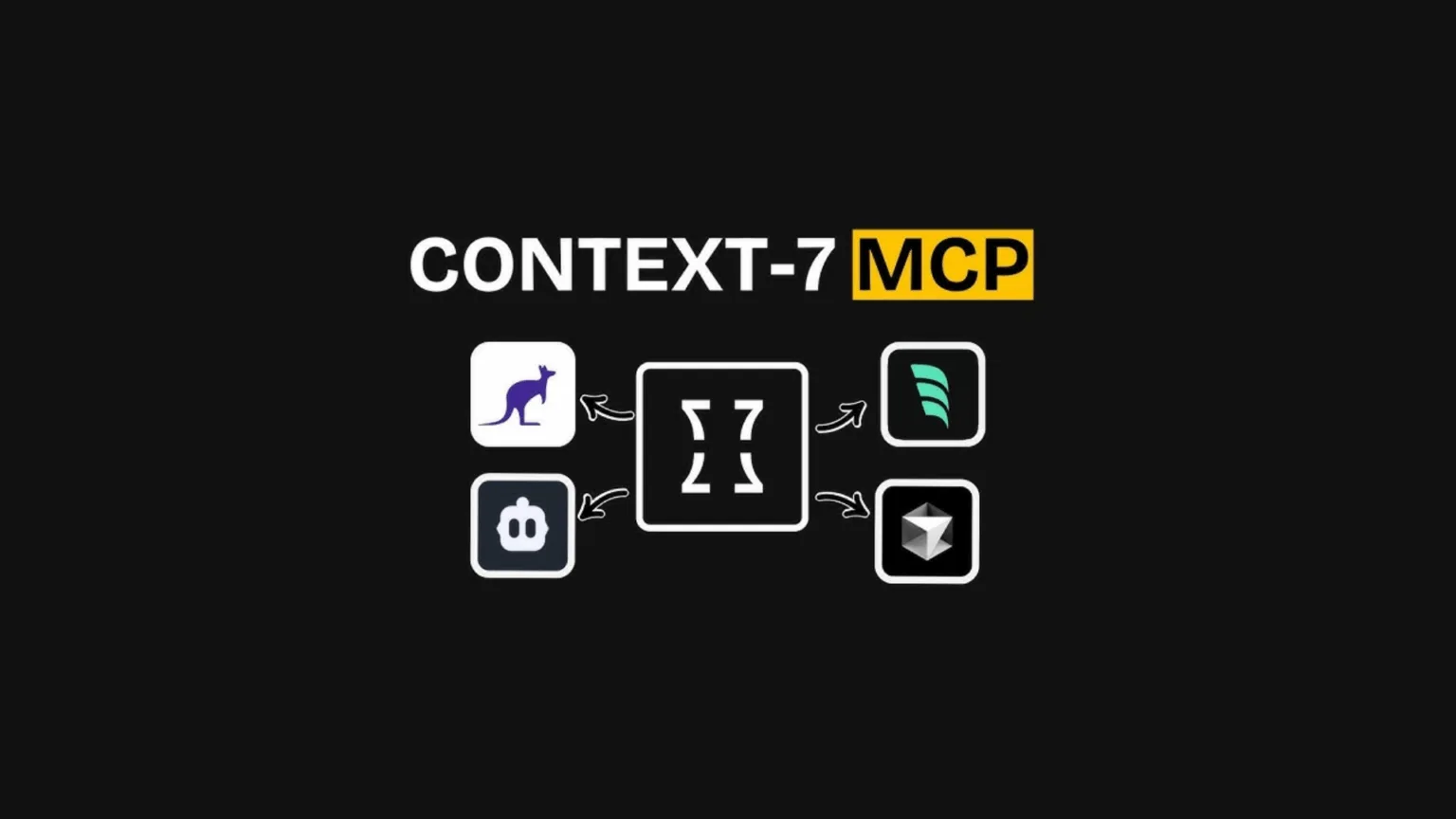

Understanding the mechanics helps explain why this tool feels so different. Context7 runs as an MCP server. The Model Context Protocol (MCP) is an open standard that Anthropic introduced in 2024; it creates standardized connections between AI applications and external data sources, making integrations cleaner and more reliable.
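Under the hood, MCP messages are JSON-RPC 2.0, and a client invokes a server tool with a `tools/call` request that names the tool and passes its arguments. The sketch below builds such a request in Python. The `make_tool_call` helper is hypothetical, and the `libraryName` argument mirrors Context7’s published tool schema at the time of writing, so treat it as an assumption and check the server’s `tools/list` response.

```python
import json
from typing import Any


def make_tool_call(request_id: int, tool: str, arguments: dict[str, Any]) -> str:
    """Build an MCP `tools/call` request as a JSON-RPC 2.0 message."""
    message = {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool, "arguments": arguments},
    }
    return json.dumps(message)


if __name__ == "__main__":
    # Tool and argument names are assumptions based on Context7's docs;
    # a real client would confirm them via the server's tools/list response.
    print(make_tool_call(1, "resolve-library-id", {"libraryName": "next.js"}))
```

A real client also performs the MCP initialization handshake over stdio or HTTP before sending a message like this; that part is omitted here.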

When you make a request, Context7 follows a multi-step pipeline. First, it identifies the library you are asking about through its “resolve-library-id” tool. Then it looks up the latest official documentation for that specific library. The system parses and cleans the content, removing irrelevant sections and formatting issues.
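The three steps above can be sketched as a small pipeline. Everything here is hypothetical: the in-memory `LIBRARY_INDEX` and `RAW_DOCS` tables stand in for Context7’s real index and documentation sources, and the `/org/project`-style IDs are illustrative only.

```python
import re
from typing import Optional

# Hypothetical stand-ins for Context7's library index and raw doc sources.
LIBRARY_INDEX = {"next.js": "/vercel/next.js", "fastapi": "/tiangolo/fastapi"}
RAW_DOCS = {
    "/vercel/next.js": "<nav>Home | Blog</nav>\n## Routing\nUse the app directory.\n<footer>(c)</footer>",
}


def resolve_library_id(name: str) -> Optional[str]:
    """Step 1: map a user-facing library name to an internal ID."""
    return LIBRARY_INDEX.get(name.strip().lower())


def fetch_docs(library_id: str) -> str:
    """Step 2: look up the latest documentation stored for that ID."""
    return RAW_DOCS.get(library_id, "")


def clean_docs(raw: str) -> str:
    """Step 3: strip navigation/footer markup and drop blank lines."""
    text = re.sub(r"<(nav|footer)>.*?</\1>", "", raw, flags=re.DOTALL)
    return "\n".join(line for line in text.splitlines() if line.strip())


def docs_for(name: str) -> str:
    """Run the whole pipeline for one library name."""
    library_id = resolve_library_id(name)
    if library_id is None:
        raise LookupError(f"unknown library: {name}")
    return clean_docs(fetch_docs(library_id))
```

Keeping each step as its own function mirrors the pipeline description and makes it easy to swap the stand-in tables for real network calls.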

Next comes the secret sauce. The c7score algorithm ranks each documentation snippet on relevance, code quality and freshness. Only the top-ranked results are cached in Upstash Redis for fast delivery on subsequent requests. The whole process is quick enough that you barely notice the delay.
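A weighted score over relevance, code quality and freshness, with a cache in front of it, might look like the following. The weights, the 0-to-1 input scores and the `TTLCache` class are assumptions for illustration, not c7score’s actual formula. An in-memory dict stands in for Upstash Redis, where you would typically store the ranked result with an expiry (for example, Redis `SET key value EX seconds`).

```python
import time
from dataclasses import dataclass
from typing import Callable


@dataclass
class Candidate:
    text: str
    relevance: float     # 0..1, hypothetical
    code_quality: float  # 0..1, hypothetical
    freshness: float     # 0..1, hypothetical


# Illustrative weights only; not c7score's real formula.
WEIGHTS = (0.6, 0.25, 0.15)


def score(c: Candidate) -> float:
    w_rel, w_code, w_fresh = WEIGHTS
    return w_rel * c.relevance + w_code * c.code_quality + w_fresh * c.freshness


def top_k(cands: list[Candidate], k: int) -> list[Candidate]:
    """Return the k highest-scoring candidates."""
    return sorted(cands, key=score, reverse=True)[:k]


class TTLCache:
    """In-memory stand-in for a Redis cache with per-key expiry."""

    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock
        self._store: dict[str, tuple[float, list[Candidate]]] = {}

    def get_or_compute(self, key: str, compute: Callable[[], list[Candidate]]) -> list[Candidate]:
        now = self.clock()
        hit = self._store.get(key)
        if hit is not None and hit[0] > now:
            return hit[1]  # cache hit: skip ranking entirely
        value = compute()  # cache miss: rank, then store with an expiry time
        self._store[key] = (now + self.ttl, value)
        return value
```

This is the cache-aside pattern: the first request for a key pays for ranking, and later requests within the TTL reuse the stored result. Injecting `clock` makes expiry testable without sleeping.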

The scale is impressive too. Context7 currently indexes documentation for over 33,000 libraries across major programming languages, including JavaScript, TypeScript, Python, Java, Go and more. Whether you are working with popular frameworks like Next.js or obscure packages that LLMs were never trained on, Context7 has you covered.

Real World Benefits for Developers and Teams

The practical advantages become clear once you start using Context7 in your daily workflow. One developer on Reddit shared how they were stuck for hours debugging NextAuth code that Cursor kept generating with deprecated APIs. After adding Context7, the same task took minutes because the AI finally had access to current best practices.

This is not just about saving time, although that alone is significant. Context7 also helps prevent technical debt by steering generated code toward the latest recommended patterns. When your AI assistant suggests code with outdated methods, it injects problems that can haunt your codebase for months. Context7 tackles this at the source.

For teams, the benefits multiply. New engineers can rely on the AI to show them current conventions for the libraries they use, which shortens onboarding. Code reviews go faster because fewer pull requests contain deprecated API calls. The overall quality of your codebase improves without extra effort.

Cost matters too, especially if you run a development team or build AI-powered tools. Context7 is free for personal use, with a free tier of 100 requests per day. Pro plans start at 10,000 requests per day for teams that need higher volume. Compared to the hours saved on debugging and rewriting code, the return on investment is easy to justify.

Setting Up Context7 with Your Favorite AI Tools

Getting started with Context7 is straightforward and works with most MCP-compatible AI tools. For Cursor users, setup takes about two minutes. Open Cursor settings, navigate to the MCP section and click “Add new global MCP server.” Then paste the configuration into your .cursor/mcp.json file.

The recommended configuration specifies “npx” as the command and adds “-y” and “@upstash/context7-mcp@latest” as two separate entries in the args array. If you run into issues with npx, some users report better results with “bunx” instead, which they find handles scoped packages more reliably.
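For reference, here is a small Python script that writes the configuration described above to a project’s .cursor/mcp.json. The `write_cursor_config` helper is hypothetical; the command and args are the ones given above. To try “bunx”, change the “command” value; whether bunx also needs the “-y” flag is an assumption to check against the Context7 README.

```python
import json
from pathlib import Path


def context7_server_entry() -> dict:
    # Command and args as described in the setup instructions above.
    return {"command": "npx", "args": ["-y", "@upstash/context7-mcp@latest"]}


def write_cursor_config(project_dir: Path) -> Path:
    """Write .cursor/mcp.json containing a single Context7 server entry."""
    config = {"mcpServers": {"context7": context7_server_entry()}}
    path = project_dir / ".cursor" / "mcp.json"
    path.parent.mkdir(parents=True, exist_ok=True)
    path.write_text(json.dumps(config, indent=2) + "\n", encoding="utf-8")
    return path
```

This overwrites any existing mcp.json in that folder. For a global server, passing `Path.home()` typically targets ~/.cursor/mcp.json, which is where Cursor reads global MCP servers.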

Claude Desktop users have two options. You can connect to the remote MCP server by adding Context7 as a custom connector with the URL https://mcp.context7.com/mcp. Alternatively, install it locally by editing your claude_desktop_config.json file and adding the server configuration along with your API key.
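Because claude_desktop_config.json often already lists other MCP servers, it is safer to merge the Context7 entry than to overwrite the file. A minimal sketch follows. The `add_context7` helper is hypothetical, and the “--api-key” argument follows the Context7 README at the time of writing, so confirm it before relying on it.

```python
import json
from pathlib import Path


def add_context7(config_path: Path, api_key: str) -> dict:
    """Merge a local Context7 server into a Claude Desktop config file.

    Existing entries under "mcpServers" are preserved. The "--api-key"
    argument is an assumption based on the Context7 README.
    """
    config: dict = {}
    if config_path.exists():
        # Treat an empty file as an empty config instead of failing to parse.
        config = json.loads(config_path.read_text(encoding="utf-8") or "{}")
    servers = config.setdefault("mcpServers", {})
    servers["context7"] = {
        "command": "npx",
        "args": ["-y", "@upstash/context7-mcp@latest", "--api-key", api_key],
    }
    config_path.parent.mkdir(parents=True, exist_ok=True)
    config_path.write_text(json.dumps(config, indent=2) + "\n", encoding="utf-8")
    return config
```

Restart Claude Desktop after editing the file so it picks up the new server.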

For VS Code with GitHub Copilot, or other MCP-enabled editors, the process follows a similar pattern. The key is making sure your editor supports the Model Context Protocol, which most modern AI coding assistants do. Once it is configured, simply add “use context7” to any prompt where you need up-to-date documentation.

One helpful tip is to set up custom rules in your AI assistant so it queries Context7 only when needed. This saves tokens and avoids unnecessary API calls. For example, you can configure it to fetch documentation automatically when you mention specific libraries or ask for code examples.
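In an editor, such a rule is usually written in plain language in the assistant’s rules settings, but its logic can be sketched as a prompt preprocessor. The watch list, the keyword pattern and the `with_context7` helper are hypothetical.

```python
import re

# Hypothetical watch list; in practice you might derive it from your dependencies.
WATCHED_LIBRARIES = {"next.js", "nextauth", "fastapi", "react"}
CODE_REQUEST = re.compile(r"\b(example|snippet|how do i|implement|write)\b", re.IGNORECASE)


def needs_docs(prompt: str) -> bool:
    """Fire when the prompt names a watched library or asks for code."""
    lowered = prompt.lower()
    mentions_library = any(
        re.search(rf"\b{re.escape(lib)}\b", lowered) for lib in WATCHED_LIBRARIES
    )
    return mentions_library or bool(CODE_REQUEST.search(prompt))


def with_context7(prompt: str) -> str:
    """Append the trigger phrase only when the rule fires and it is not already present."""
    if needs_docs(prompt) and "use context7" not in prompt.lower():
        return f"{prompt.rstrip()} use context7"
    return prompt
```

Word boundaries keep “react” from matching “reaction”, so unrelated prompts pass through untouched and cost no extra tokens.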

Who Should Pay Attention to Context7 Right Now

Context7 is particularly valuable for certain types of developers and teams. If you work with rapidly evolving frameworks like Next.js, React or FastAPI, where APIs change frequently, this tool becomes essential. The frustration of getting outdated code suggestions largely disappears.

Startup developers and solo entrepreneurs building products quickly will appreciate the speed boost. When you are trying to ship features fast, the last thing you need is to spend half your day verifying whether AI-generated code actually works with current library versions. Context7 removes much of that friction.

Developers working with lesser-known libraries or niche packages get huge value too. LLMs often hallucinate APIs outright when asked about tools they were not extensively trained on. Context7 levels the playing field by pulling real documentation for any library in its index, regardless of how popular it is.

For experienced developers who already know their tools well, Context7 still helps when exploring new libraries or keeping up with updates. Instead of reading changelog after changelog, you can ask your AI assistant specific questions and trust the answers more, because they are grounded in current docs.

The tool also shines in educational contexts. Coding bootcamps and online courses can recommend Context7 to students so they learn current best practices instead of patterns that were relevant two years ago. This reduces confusion and builds better habits from day one.

The Bigger Picture: MCP Servers and the Future of AI Development

Context7 represents something larger than better documentation access. It is part of a growing ecosystem of MCP servers that are fundamentally changing how AI applications connect to the outside world. The Model Context Protocol is becoming the standard way to give AI models access to external tools and data sources.

Before MCP, every AI tool needed custom integrations for each data source or API. These brittle connections broke frequently and required constant maintenance. MCP standardizes the communication layer, so any MCP-compatible client can talk to any MCP server. This modularity is powerful.

Major players like Anthropic, OpenAI and Microsoft have all embraced MCP, which suggests it is here to stay. For developers, this means investments in MCP servers like Context7 should remain valuable as the ecosystem matures. You are not betting on a proprietary solution that might disappear.

The practical implications extend beyond coding. MCP servers are being built for database access, cloud infrastructure management, security operations and more. Context7 focuses on documentation because that is where AI coding assistants struggle most, but the same principles apply across domains.

What is exciting is seeing how this technology lets AI agents become genuinely useful rather than just impressive demos. When your AI can pull current data, execute real actions and access specialized knowledge, it goes from a chatbot to an actual teammate that gets work done.

For India-based developers and tech entrepreneurs building products, this is particularly relevant. The ability to develop faster with fewer resources levels the playing field against larger competitors. Context7 and similar MCP tools give small teams capabilities that previously required extensive infrastructure.

Understanding the Limitations and Trade-offs

While Context7 solves major problems, it is important to understand what it does not do. The tool fetches and formats documentation, but it does not make your AI smarter about architecture decisions or complex problem solving. It provides better context, not better reasoning.

The free tier limit of 100 requests per day works fine for individual developers, but teams can hit that ceiling quickly. If you run an AI-powered development tool or have several developers using Context7 constantly, you will need to budget for the Pro plan. The pricing is reasonable, but it is worth planning ahead.
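A quick back-of-the-envelope check shows how fast a team reaches the 100-requests-per-day ceiling quoted above. The inputs are your own estimates. The `calls_per_lookup` multiplier is an assumption covering clients that make two tool calls per lookup (resolve the library, then fetch docs); whether both count toward the quota is something to confirm with Context7’s pricing page.

```python
import math


def daily_requests(
    developers: int,
    prompts_per_dev: int,
    share_using_docs: float,
    calls_per_lookup: int = 1,
) -> int:
    """Estimate Context7 requests per day from your own usage guesses (rounded up)."""
    return math.ceil(developers * prompts_per_dev * share_using_docs * calls_per_lookup)


def fits_free_tier(requests_per_day: int, daily_limit: int = 100) -> bool:
    # 100 requests/day is the free-tier figure quoted in this article.
    return requests_per_day <= daily_limit
```

For example, five developers each sending 40 prompts a day, half of them with Context7, reach exactly 100 requests. A sixth developer, or two calls per lookup, pushes the team past the free tier.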

Context7 currently focuses on public libraries and frameworks. If you work with proprietary internal documentation or company-specific tools, its public index will not cover them. However, you could build your own MCP server following similar principles, which is where the open-source nature of MCP becomes valuable.

Token consumption is another consideration. While Context7’s c7score algorithm optimizes for conciseness, you are still injecting additional context into every relevant prompt. This increases token usage compared to relying solely on the model’s training data. For most developers, the accuracy gain justifies the cost, but it is worth monitoring.
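The trade-off is easy to quantify. The sketch below estimates the extra tokens per day and their cost; every number in it, including the price per million tokens, is a placeholder to replace with your own model’s figures.

```python
def context_overhead(
    base_prompt_tokens: int, doc_tokens: int, prompts_with_docs: int
) -> tuple[int, float]:
    """Return (extra tokens per day, relative size increase of each augmented prompt)."""
    if base_prompt_tokens <= 0:
        raise ValueError("base_prompt_tokens must be positive")
    extra_per_day = doc_tokens * prompts_with_docs
    relative_increase = doc_tokens / base_prompt_tokens
    return extra_per_day, relative_increase


def daily_cost(extra_tokens: int, usd_per_million_tokens: float) -> float:
    """Convert extra input tokens into dollars at a placeholder price."""
    return extra_tokens * usd_per_million_tokens / 1_000_000


if __name__ == "__main__":
    extra, increase = context_overhead(base_prompt_tokens=2_000, doc_tokens=4_000, prompts_with_docs=50)
    print(extra, f"{increase:.0%}", round(daily_cost(extra, usd_per_million_tokens=3.0), 2))
```

Comparing that daily figure with the time a correct first answer saves is a concrete way to decide whether the overhead is worth it for your team.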

Some users report occasional issues with the library resolution step (the “resolve-library-id” tool), especially for packages with similar names or multiple versions. The system usually handles this well, but you might need to be specific about which library you want. Mentioning version numbers explicitly helps.
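The benefit of naming a version can be shown with a toy resolver: when two candidates share a name, a version filter can leave exactly one. The candidate data, the `pick_library` helper and the “/org/project/version” ID shape are illustrative assumptions, not Context7’s actual resolution logic.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class LibraryCandidate:
    library_id: str  # illustrative "/org/project" style ID
    name: str
    versions: list[str] = field(default_factory=list)


def pick_library(
    candidates: list[LibraryCandidate], name: str, version: Optional[str] = None
) -> Optional[str]:
    """Return a single library ID, or None if the request is still ambiguous."""
    matches = [c for c in candidates if c.name.lower() == name.lower()]
    if version is not None:
        # An explicit version narrows same-named candidates to those that ship it.
        matches = [c for c in matches if version in c.versions]
    if len(matches) != 1:
        return None  # no match or several: ask the user to be more specific
    lib = matches[0]
    return f"{lib.library_id}/{version}" if version is not None else lib.library_id
```

Returning `None` on ambiguity, rather than guessing, matches the advice above: be specific and let the version narrow the match.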

Comparing Context7 to Alternative Approaches

Before Context7, developers had a few options for dealing with outdated AI suggestions. The most common was manually copying documentation into prompts, which worked but was tedious and inconsistent. You had to remember to do it, find the right docs and paste the relevant sections.

Some developers built custom retrieval-augmented generation (RAG) systems that indexed their project documentation. This works well for proprietary code but requires significant setup and maintenance. Context7 gives you similar benefits for public libraries without the infrastructure overhead.

Tools like Codeium and Tabnine offer specialized code completion models, sometimes trained on more recent data than general-purpose base models. These help, but they still depend on training cutoffs and do not pull documentation in real time. Context7 complements these tools rather than replacing them.

Recently, a developer on Reddit shared an alternative to Context7 that they claimed offered 40% lower costs with similar quality. Competition is healthy, and having options benefits everyone. Still, Context7’s advantages are its extensive library coverage (33,000+ libraries) and the maturity of its c7score ranking algorithm.

Switching to a newer base model such as GPT-4 or Claude 3.5 does not remove the need for Context7, because even the latest models have training cutoffs. A model trained six months ago does not know about changes from last week. Context7 bridges that gap regardless of which base model you use.

For developers in India focused on cost efficiency, Context7’s free tier is genuinely usable for personal projects and side hustles. You only need a paid plan when you scale to team usage or commercial applications. This makes it accessible at any budget level.

Final Thoughts on Context7’s Impact

The Context7 MCP server represents a meaningful step toward making AI coding assistants reliable. The difference between code that works and code that looks right but fails is the difference between useful and frustrating, and Context7 shifts the balance firmly toward useful.

What impresses most is how well it solves one specific problem without trying to do everything. Instead of building yet another AI coding tool, the creators focused on the outdated-documentation problem that affects all AI assistants. This focused approach makes it easy to integrate into existing workflows.

For developers who spend significant time with AI coding tools, adding Context7 feels like upgrading from dial-up to fiber internet. The core activity stays the same, but everything moves faster with fewer interruptions. Code suggestions work on the first try more often, and debugging sessions shrink noticeably.

The broader implications for AI development workflows are significant too. As MCP servers proliferate, we will see AI assistants that can reliably access current information across domains, not just documentation. This turns AI from an impressive party trick into a legitimate productivity multiplier.

Whether you are a solo developer building your next app, a student learning to code or part of a development team shipping features daily, Context7 deserves attention. Setup takes minutes, the free tier is generous and the quality improvement is immediate. In a space full of overhyped AI tools, this one actually delivers.

If you are curious to explore Context7 further, check out the Context7 website to get started. And if you are interested in other AI-powered tools that are changing how we work, don’t miss WeatherNext 2, which brings similar innovation to weather forecasting.